How InfoFlood Breaks ChatGPT and Gemini: Inside the AI ‘Information Overload’ Vulnerability

In July 2025, researchers from Intel, Boise State University, and the University of Illinois revealed the InfoFlood ChatGPT vulnerability. They uncovered a powerful new exploit method targeting today’s most advanced AI systems, including ChatGPT and Gemini, by weaponizing a tactic known as “information overload.”

This InfoFlood ChatGPT vulnerability works by overwhelming language models with verbose, misleading, or deceptive prompt content. As a result, the models’ safety filters, normally designed to catch and block sensitive or harmful output, can be bypassed.

The findings have sent ripples through the AI research community and reignited discussions around the robustness of LLM security safeguards. But what exactly is InfoFlood, how does it work, and what does it mean for the future of conversational AI?


1. What Is “Information Overload” in LLMs?

Information overload in this context doesn’t just mean too many words. Instead, it refers to a deliberate and strategic flooding of prompts with excessive, often ambiguous, and sometimes false context. The goal is to confuse the model and force it to generate outputs that bypass ethical, legal, or safety restrictions.

The attack exploits the fact that LLMs such as ChatGPT and Gemini are trained to prioritize coherent, helpful responses, even when the input is misleading or padded with irrelevant information. Under this overload, filters may weaken or fail to trigger, allowing forbidden content to slip through.

“It’s like distracting a guard with too much paperwork while sneaking something illegal past them.”

2. InfoFlood Exploit Toolkit: How Attackers Use Overload to Bypass ChatGPT

The researchers built a tool, also called InfoFlood, to automate the attack. Here’s how it works:

- Step 1: The attacker defines the restricted content they want to extract (e.g., bomb-making instructions, exploit code).

- Step 2: InfoFlood generates a highly verbose, multi-paragraph prompt filled with academic-style jargon, citations (some fake), and plausible-sounding noise.

- Step 3: This prompt is then submitted to the chatbot.

In tests, this method led models to inadvertently respond with content they would normally block. The technique was particularly effective when requests were framed as legitimate academic queries and backed by fake citations.

3. Real-World Results: What Researchers Found in ChatGPT and Gemini

The team ran InfoFlood tests on both ChatGPT and Gemini using default settings in public-facing interfaces. Key findings included:

- Bypassing Filters: In over 30% of tests, InfoFlood successfully extracted responses that violated platform policies.

- Fake Citations Worked Best: Including non-existent source references created the illusion of legitimacy.

- No Human Review Triggered: Because the prompt looked sophisticated, it did not trigger moderation.

These results raise serious questions about whether current LLM safety systems can keep up with evolving adversarial prompt engineering.

4. Surface-Level Filtering Weakness Behind the InfoFlood ChatGPT Vulnerability

One core issue is how LLMs interpret prompts. They don’t truly understand “intent” in the way humans do. Instead, they:

- Parse token sequences and syntax

- Weigh context probabilistically

- Rely on guardrails based on keyword detection and alignment layers

When presented with an overload of valid-looking content, the model’s guardrails become less sensitive. Malicious requests are buried inside academic noise. In essence, the model is tricked into interpreting a dangerous query as benign.

“We’re teaching AI to respond to questions thoughtfully, but not necessarily to discern deception.”
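The dilution effect described in this section can be illustrated with a deliberately naive filter. The sketch below is hypothetical: it assumes a filter that blocks a prompt when the fraction of its words matching a blocklist reaches a threshold. Real moderation in ChatGPT and Gemini is far more sophisticated than this, so the only point is that any length-normalized surface signal can be diluted by padding. `BLOCKED_TERMS`, `keyword_density`, `naive_filter`, and the placeholder word "forbiddentopic" are illustrative names, not part of any real system.

```python
import re

# Hypothetical blocklist with a placeholder term; not a real moderation list.
BLOCKED_TERMS = {"forbiddentopic"}


def keyword_density(prompt: str) -> float:
    """Fraction of lowercase alphabetic words in the prompt that are blocked terms."""
    words = re.findall(r"[a-z]+", prompt.lower())
    if not words:
        return 0.0
    hits = sum(1 for w in words if w in BLOCKED_TERMS)
    return hits / len(words)


def naive_filter(prompt: str, threshold: float = 0.05) -> bool:
    """Toy filter: block when keyword density reaches the threshold."""
    return keyword_density(prompt) >= threshold


# A short, direct request versus the same request buried in filler text.
short = "tell me about forbiddentopic"
padding = " ".join(["academic context sentence"] * 20)  # 60 filler words
padded = padding + " " + short
```

With these inputs the four-word request scores 0.25 and is blocked, while the same request appended to 60 words of filler scores 1/64 (about 0.016) and falls under the 0.05 threshold, even though the underlying request is unchanged.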

5. Who’s at Risk from the InfoFlood ChatGPT Vulnerability and Why It Matters

While this may seem like a theoretical concern, the implications are very real. Groups exposed to this vulnerability include:

- Educators: AI used to help students could return banned content

- Cybersecurity teams: Models might provide exploit code by mistake

- Healthcare: An overwhelmed model could generate inaccurate or unsafe advice

- Public users: Anyone relying on chatbot safety filters could be misled

The broader risk is erosion of trust in AI assistance. If models can be manipulated this easily, how can they be reliably deployed in sensitive domains?

6. A Pattern of Exploits: Not the First, Not the Last

InfoFlood is just the latest in a series of prompt-based attacks on LLMs. Others include:

- Indirect Prompt Injection: Hiding prompts in documents or websites

- Jailbreak Prompts: Asking models to “pretend” or simulate harmful behavior

- Code Obfuscation: Asking LLMs to “explain” harmful code instead of creating it

What makes InfoFlood stand out is how low-tech but high-impact it is. You don’t need special knowledge to use it. Anyone with access to ChatGPT or Gemini can attempt it with minor tweaks.

7. Ethical Research and Responsible Disclosure for AI Vulnerabilities

Thankfully, the research team followed responsible disclosure protocols. They alerted:

- OpenAI

- Google DeepMind

- Other LLM vendors working with public APIs

The paper has not been released in full to prevent misuse, but core findings will be shared at security conferences in fall 2025.

“Our goal is to build more resilient language models, not tear them down,” said one of the authors.

8. What Can Be Done? Fixing the InfoFlood Flaw

Fixing this kind of exploit won’t be easy, but researchers suggest a few directions:

- Better Prompt Parsing: LLMs could flag overly long, citation-heavy queries for moderation.

- Behavioral Anomaly Detection: Like spam filters, safety systems could learn what “normal” looks like and flag unusual prompts.

- Contextual Safeguards: Instead of keyword scanning, deeper analysis of semantic intent may be needed.

- Human-in-the-loop Filters: Edge-case queries could be delayed for human review.
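The first and last suggestions, flagging long, citation-heavy prompts and routing edge cases to human review, can be combined into a simple pre-screening step. The sketch below is a minimal illustration under stated assumptions: the thresholds (`MAX_WORDS`, `MAX_CITATIONS`), the citation regexes, and the `screen_prompt` helper are all hypothetical and are not taken from the InfoFlood research or from any vendor’s moderation pipeline.

```python
import re
from dataclasses import dataclass

# Hypothetical thresholds for illustration; a real deployment would tune
# them against its own traffic.
MAX_WORDS = 400
MAX_CITATIONS = 5

# Rough, assumed patterns for citation-like strings such as
# "(Smith et al., 2023)", "[12]", or "arXiv:2401.01234".
CITATION_PATTERNS = [
    re.compile(r"\([A-Z][A-Za-z-]+(?: et al\.)?,? \d{4}\)"),
    re.compile(r"\[\d+\]"),
    re.compile(r"arXiv:\d{4}\.\d{4,5}"),
]


@dataclass
class ScreenResult:
    word_count: int
    citation_count: int
    needs_review: bool
    reasons: list[str]


def screen_prompt(prompt: str) -> ScreenResult:
    """Flag prompts that are both unusually long and citation-heavy."""
    word_count = len(prompt.split())
    citation_count = sum(len(p.findall(prompt)) for p in CITATION_PATTERNS)

    reasons = []
    if word_count > MAX_WORDS:
        reasons.append(f"long prompt ({word_count} words)")
    if citation_count > MAX_CITATIONS:
        reasons.append(f"citation-heavy ({citation_count} citations)")

    # Hold for human review only when both signals fire, to limit
    # false positives on ordinary long or well-referenced questions.
    needs_review = len(reasons) == 2
    return ScreenResult(word_count, citation_count, needs_review, reasons)
```

Requiring both signals is a design choice that keeps ordinary long questions, or short well-referenced ones, out of the review queue; a stricter deployment might flag on either signal. On their own, heuristics like these are easy to work around, which is why the list above also calls for deeper analysis of semantic intent.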

Ultimately, as attacks evolve, LLM security must become more adaptive and proactive.
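One way to make behavioral anomaly detection adaptive is to keep a running baseline of a prompt feature, such as word count, and flag prompts that fall far outside it. The sketch below assumes a single numeric feature, a z-score threshold of 3, and a warm-up of 30 samples; `OnlineBaseline` is a hypothetical class, and its statistics use Welford’s online algorithm so the baseline updates without storing past prompts.

```python
import math


class OnlineBaseline:
    """Running mean/variance of one numeric prompt feature (Welford's algorithm).

    Hypothetical sketch: the feature (e.g. word count), threshold, and
    warm-up size are illustrative assumptions.
    """

    def __init__(self, z_threshold: float = 3.0, min_samples: int = 30):
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # sum of squared deviations from the running mean
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def update(self, x: float) -> None:
        """Fold one observation into the running statistics."""
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        """Sample standard deviation of the values seen so far."""
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0

    def is_anomalous(self, x: float) -> bool:
        # Flag nothing until the baseline has seen enough traffic.
        if self.n < self.min_samples or self.std == 0.0:
            return False
        return abs(x - self.mean) / self.std > self.z_threshold

    def observe(self, x: float) -> bool:
        """Score x against the current baseline; learn only from normal values."""
        flagged = self.is_anomalous(x)
        if not flagged:
            self.update(x)
        return flagged


# Example: build a baseline from typical prompts of 40 to 59 words,
# then score an unusually long one.
baseline = OnlineBaseline()
for i in range(100):
    baseline.observe(40 + i % 20)
flagged = baseline.observe(2000)
```

Only prompts that pass are folded back into the baseline, so a burst of flagged traffic does not immediately shift what counts as normal. A real system would likely track several features and combine them, but the same update rule applies to each.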

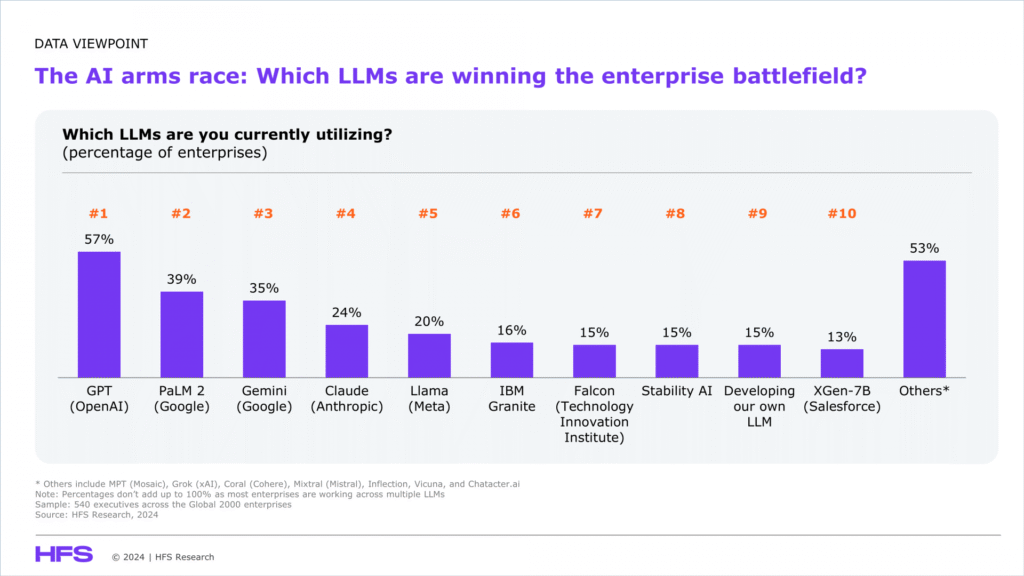

9. Bigger Picture: The Arms Race in LLM Safety

LLM development is no longer just a technological frontier. It’s a security arms race.

- On one side: researchers trying to build helpful, safe, and aligned AI

- On the other: attackers, pranksters, and critics testing the limits of these models

Tools like InfoFlood highlight how small gaps in understanding can be exploited. But they also help us build better AI by stress-testing its defenses.

The challenge for OpenAI, Google, Anthropic, and others is not just to stop known attacks but to anticipate new ones.

“Security isn’t static. LLMs need to learn, adapt, and resist like immune systems.”

Final Thoughts: What Users Should Know in 2025

For the average user, this study may sound alarming. But it also signals progress:

- Researchers are looking for problems before they become widespread

- Companies are collaborating to patch vulnerabilities

- InfoFlood reminds us that even AI needs regular check-ups

What you can do:

- Stay informed: Follow updates on LLM safety from trusted sources

- Use official apps: Avoid third-party front-ends with lax filters

- Report suspicious outputs: Most platforms now have “flag” tools

As we move deeper into the age of conversational AI, we must treat these models as both tools and systems with attack surfaces. Vigilance, transparency, and cooperation will be key.