ChatGPT Hallucination Turned Real: The Soundslice ASCII Tab Story

ChatGPT hallucinations aren’t just a quirk of modern AI; they’re starting to shape product development in the real world. In a fascinating twist, Adrian Holovaty, founder of the music learning platform Soundslice, recently built a feature based on something ChatGPT made up.

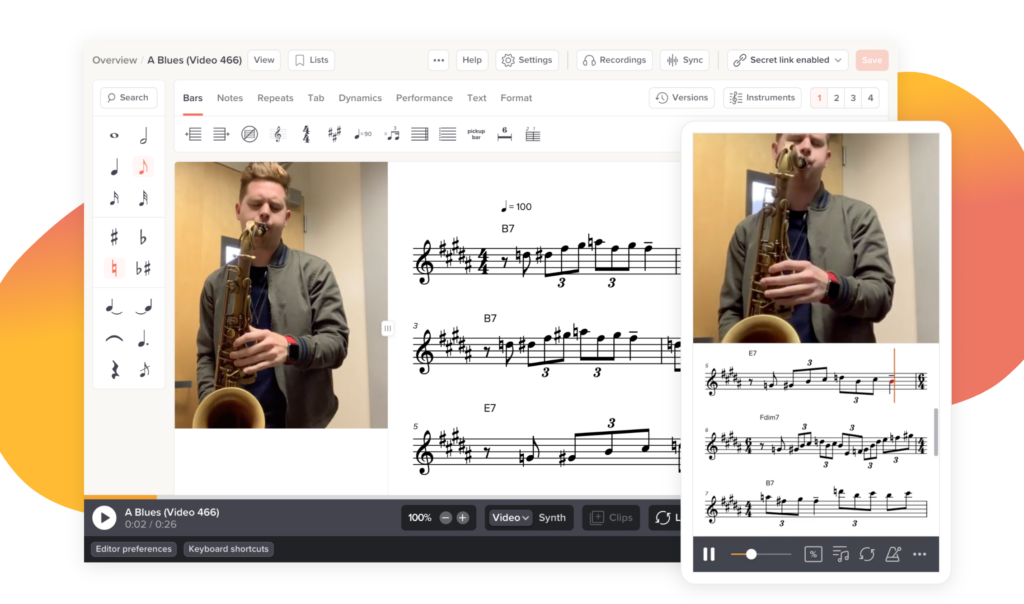

Earlier this year, Holovaty noticed a strange pattern in his platform’s error logs: users were uploading screenshots of ChatGPT conversations. The screenshots didn’t point to a bug in Soundslice. They showed AI chats containing ASCII-style guitar tabs, which people were uploading to Soundslice’s “sheet music scanner” in the hope of hearing the music.


Why ChatGPT Hallucinations Mislead Users So Easily

So, what is a hallucination in the world of AI? A ChatGPT hallucination happens when the AI confidently produces false but plausible-sounding information. It isn’t lying, per se; it’s making probabilistic guesses that happen to be wrong.

In this case, ChatGPT falsely claimed that users could upload ASCII tab screenshots to Soundslice and get playable audio. That wasn’t true, but users believed it. The hallucination was specific, consistent, and presented with confidence.


ASCII Tabs vs Standard Notation: The Confusion Explained

ASCII tablature is a text-only way of showing guitar music. It uses rows of dashes and numbers: each row stands for a guitar string, and a number on a row tells you which fret to press on that string. Common in forums and email threads, it’s a simple workaround when you don’t have real sheet music.
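To make the format concrete, here is a minimal Python sketch of how a block of ASCII tab could be turned into pitched notes. It is not Soundslice’s implementation; the names `parse_ascii_tab`, `NoteEvent`, and `STANDARD_TUNING` are hypothetical, and it assumes standard tuning with the high E string labelled lowercase `e`, one tab block per call, and that horizontal position is only an ordering hint rather than real rhythm.

```python
from dataclasses import dataclass

# Hypothetical tuning table (assumption: standard EADGBE tuning, with the
# high E string labelled lowercase "e" and the low E string uppercase "E").
# Values are MIDI note numbers for each open string.
STANDARD_TUNING = {"e": 64, "B": 59, "G": 55, "D": 50, "A": 45, "E": 40}


@dataclass
class NoteEvent:
    column: int  # character offset after the "|", used only as an ordering hint
    string: str  # string label, e.g. "e" or "A"
    fret: int    # fret number read from the tab (0 = open string)
    midi: int    # pitch = open-string MIDI number + fret


def parse_ascii_tab(text: str) -> list[NoteEvent]:
    """Turn one block of ASCII tab into note events ordered left to right."""
    events: list[NoteEvent] = []
    for line in text.strip().splitlines():
        label, sep, body = line.strip().partition("|")
        label = label.strip()
        if not sep or label not in STANDARD_TUNING:
            continue  # not a string line (e.g. a title or a blank line)
        col = 0
        while col < len(body):
            if body[col].isdecimal():
                start = col
                # Consume consecutive digits so "12" is read as fret 12, not 1 then 2.
                while col < len(body) and body[col].isdecimal():
                    col += 1
                fret = int(body[start:col])
                events.append(NoteEvent(start, label, fret, STANDARD_TUNING[label] + fret))
            else:
                col += 1  # dashes, bars and technique symbols are skipped
    # Order by horizontal position, then low-to-high pitch within a column.
    return sorted(events, key=lambda ev: (ev.column, ev.midi))


if __name__ == "__main__":
    # An A minor arpeggio written as ASCII tab.
    am_arpeggio = """
e|-------0-|
B|-----1---|
G|---2-----|
D|-2-------|
A|-0-------|
E|---------|
"""
    for ev in parse_ascii_tab(am_arpeggio):
        print(ev)
```

The column offsets only order the notes. ASCII tab usually leaves rhythm implicit, so a real importer would still have to infer durations before it could play anything back.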

Soundslice’s sheet music scanner, however, was built to read printed notation: actual musical staves, not dashes and digits in a monospace font. So when users uploaded ASCII tab images, the scanner had no idea what it was looking at.

The Logs That Didn’t Make Sense (Until They Did)

Initially, Holovaty was baffled. Why would people upload plain screenshots of text?

Curious, he ran his own ChatGPT session and asked about Soundslice. Sure enough, the bot confidently described a feature that didn’t exist: the ability to convert ASCII tabs from images into playable music.

That’s when everything clicked: the AI was misinforming users, and they were following its advice.

More importantly, these weren’t a few isolated cases: the uploads were consistent, deliberate, and spread across multiple days. This wasn’t confusion; it was belief. Users trusted the AI so much that they bypassed the platform’s own documentation and relied entirely on what ChatGPT told them.

When a Fake Feature Becomes a Real One

Faced with this odd influx, Holovaty had two choices:

- Add a warning saying ASCII tab screenshots weren’t supported

- Or… actually build what the AI was falsely promoting

He chose the latter.

“I’m happy to add a tool that helps people,” he wrote on his blog. “But I feel like our hand was forced in a weird way.”

Now, Soundslice can process ASCII tabs from screenshots. The hallucinated feature is real.

Should Businesses Respond to AI Misinformation?

The Soundslice story raises a bigger question: should companies build what AI claims they can do, even when those claims are false?

Some developers compared it to a sales rep promising features the team hasn’t shipped. But in this case, the “sales rep” is a bot with no accountability.

This moment is part cautionary tale, part innovation roadmap.

Final Thoughts

What began as a ChatGPT hallucination ended as a real-world product update. Adrian Holovaty turned an error into an enhancement: something that helps users while illustrating how AI reshapes expectations.

It also suggests that your best marketer might be an AI… and also your least reliable one.

🔗 Explore the new feature at Soundslice.com