15 Biggest Artificial Intelligence Challenges to Tackle in 2025

Artificial intelligence (AI) continues to transform our world at breakneck speed, affecting industries from healthcare and finance to education and entertainment. By some estimates, AI could contribute up to $15.7 trillion to the global economy by 2030. Yet despite its promise, AI also brings a host of complex challenges that span technical, ethical, legal, and societal realms. As we enter 2025, it’s more important than ever to understand these hurdles and work together on creative solutions.

Below, we explore the top 15 AI challenges expected to dominate conversations in 2025. For each, we’ll outline the core issue and suggest practical avenues for addressing it.

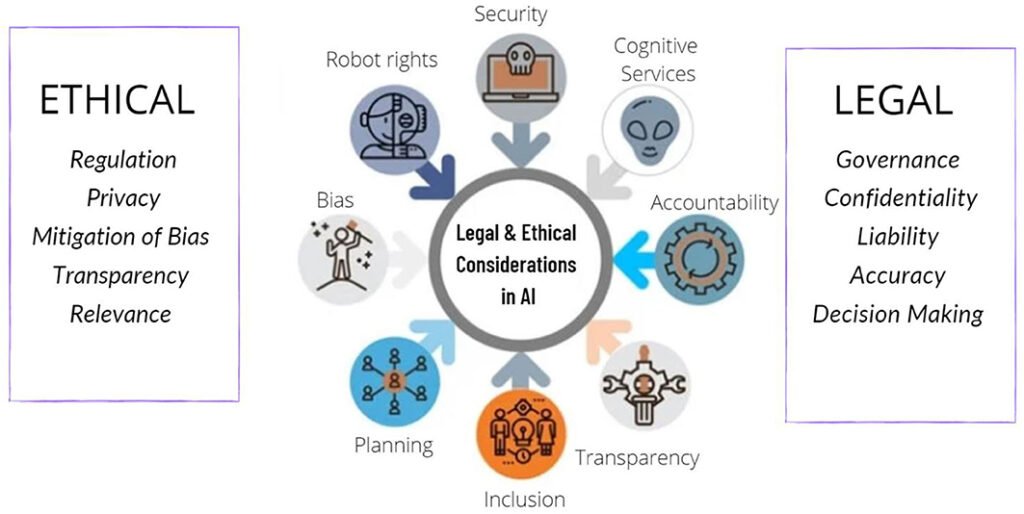

1. Ethical Quandaries in AI

As AI systems take on higher-stakes decisions (helping doctors diagnose illnesses, informing judges’ parole decisions, or screening résumés for hiring), questions of moral responsibility become pressing. How do we ensure that an AI-driven medical diagnosis respects patient privacy and consent? In the justice system, would an algorithm’s risk assessment unfairly penalize certain groups?

Proposed Actions

- Develop and adhere to robust ethics frameworks that guide AI design, testing, and deployment.

- Establish multi-stakeholder ethics boards (including ethicists, domain experts, and affected community representatives) to review high-impact AI projects.

- Incorporate “ethical audits” or third-party reviews before launching critical AI applications.

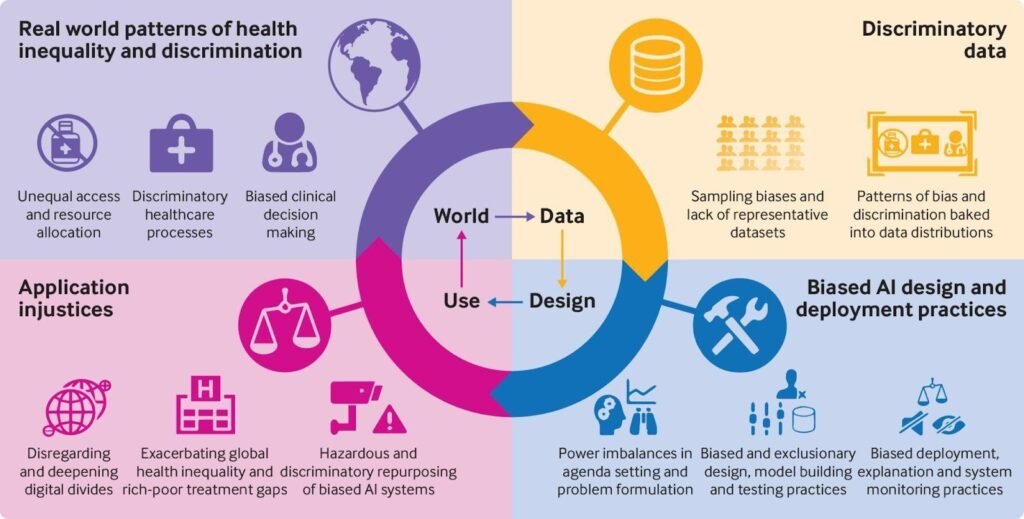

2. Bias and Fairness in Machine Learning

AI models learn patterns from data, and if that data reflects historical prejudices (e.g., gender or racial bias in hiring), the model can perpetuate or even amplify those biases. In 2025, bias remains a top concern, especially in sectors like criminal justice, credit scoring, and medical treatment.

Proposed Actions

- Enforce rigorous data audits to identify underrepresented groups or skewed feature distributions before training.

- Adopt fairness-aware algorithms (techniques such as reweighting, adversarial debiasing, or counterfactual fairness) to mitigate bias at the model level.

- Continuously monitor post-deployment outcomes; build feedback loops so human auditors can flag unfair behaviors and retrain models accordingly.
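
To make “reweighting” concrete, here is a minimal sketch of one well-known variant: reweighing in the style of Kamiran and Calders. It assumes a single protected attribute and binary labels. Each training example gets the weight P(group) × P(label) / P(group, label). In the weighted data, group membership and outcome become statistically independent. The function names and the toy data are illustrative and do not come from any particular library.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Per-example weights w(g, y) = P(g) * P(y) / P(g, y).

    In the weighted data, group membership and label are statistically
    independent, which removes the group/outcome correlation in the labels.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    weights = []
    for g, y in zip(groups, labels):
        expected = (group_counts[g] / n) * (label_counts[y] / n)  # frequency if independent
        observed = joint_counts[(g, y)] / n                        # actual frequency
        weights.append(expected / observed)
    return weights


def weighted_positive_rate(groups, labels, weights, group):
    """Share of the weighted examples in `group` that carry a positive label."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    return positive / total


# Hypothetical data: group "a" has a 2/3 positive rate, group "b" only 1/3.
groups = ["a", "a", "a", "b", "b", "b"]
labels = [1, 1, 0, 1, 0, 0]
weights = reweighing_weights(groups, labels)
# After reweighing, both groups have a weighted positive rate of about 0.5.
print(weights)
print(weighted_positive_rate(groups, labels, weights, "a"))
print(weighted_positive_rate(groups, labels, weights, "b"))
```

The weights can be passed to any learner that accepts per-sample weights. Reweighting only corrects the joint distribution of group and label. It does not fix biased features or mislabeled examples, so it complements the data audits rather than replacing them.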

3. Seamless AI Integration into Legacy Systems

Many enterprises have spent decades building on legacy infrastructure. Introducing AI models, often requiring massive data pipelines, GPU-enabled servers, or cloud-native architectures, poses significant integration headaches. Organizations struggle to embed AI into existing workflows without major disruptions.

Proposed Actions

- Conduct a thorough “AI readiness” audit to map current systems, data quality, and organizational processes.

- Pilot small-scale AI proofs of concept that interface with legacy databases or applications via APIs, gradually expanding scope.

- Invest in upskilling and cross-functional teams: data engineers, software architects, and domain experts must collaborate on bridging old and new systems.

4. Enormous Computing Demands

Training advanced deep learning models, especially large language models (LLMs) or high-resolution computer vision networks, requires significant computing power. Even as dedicated AI chips (TPUs, neuromorphic processors) become more common, smaller organizations may lack the budget or access to affordable, eco-friendly compute resources.

Proposed Actions

- Explore distributed training and federated learning approaches to leverage idle compute across multiple nodes or edge devices.

- Partner with cloud providers offering subsidized GPU/TPU credits for research and smaller enterprises.

- Investigate emerging hardware innovations (neuromorphic chips, quantum-inspired accelerators) to boost efficiency and reduce energy consumption.
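
A back-of-the-envelope estimate shows why compute is such a bottleneck. A common rule of thumb puts the training cost of a dense transformer at roughly 6 FLOPs per parameter per training token, covering the forward and backward passes. The sketch below applies that rule. All of its inputs are hypothetical values chosen for illustration, not measurements of any real system: the model size, the token count, the accelerator’s peak throughput and the 40% utilization.

```python
SECONDS_PER_DAY = 86_400


def training_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb training cost for a dense transformer:
    about 6 FLOPs per parameter per token (forward + backward pass)."""
    return 6.0 * n_params * n_tokens


def accelerator_days(total_flops: float, peak_flops_per_sec: float, utilization: float) -> float:
    """Convert a FLOP budget into accelerator-days at a given sustained utilization."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    sustained = peak_flops_per_sec * utilization
    return total_flops / sustained / SECONDS_PER_DAY


# Hypothetical inputs: a 7B-parameter model, 1T training tokens,
# an accelerator with 1e15 FLOP/s peak, and 40% sustained utilization.
flops = training_flops(7e9, 1e12)
days = accelerator_days(flops, 1e15, 0.4)
print(f"{flops:.2e} FLOPs, about {days:,.0f} accelerator-days")
```

With these assumed numbers, a 7-billion-parameter model trained on one trillion tokens needs about 4.2 × 10^22 FLOPs. That is roughly 1,200 accelerator-days, and it excludes failed runs, hyperparameter sweeps, and evaluation.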

5. Safeguarding Data Privacy and Security

AI thrives on massive datasets, often containing personal, sensitive, or proprietary information. In 2025, the risk of data breaches, model inversion attacks, and unauthorized data access remains high. How can organizations harness data for AI without jeopardizing individuals’ privacy?

Proposed Actions

- Employ robust encryption methods, both at rest and in transit, to protect sensitive data from interception.

- Adopt privacy-preserving techniques such as differential privacy, homomorphic encryption, and secure multi-party computation, which let models learn from data without exposing raw inputs.

- Implement strict access controls, audit trails, and regular security pen-testing specifically focused on AI pipelines.
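
As an illustration of differential privacy, the sketch below implements the classic Laplace mechanism for a counting query. Adding or removing one person changes a count by at most 1 (sensitivity 1). Adding Laplace noise with scale 1/ε therefore makes the released count ε-differentially private. The patient records and the query are hypothetical. This toy version uses Python’s non-cryptographic `random` module and naive floating-point sampling, and neither is suitable for production. A real system should use an audited DP library and track the privacy budget across queries.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale): the difference of two independent
    exponential draws with mean `scale` is Laplace-distributed."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng=None) -> float:
    """Release an epsilon-differentially private count (Laplace mechanism).

    A counting query has sensitivity 1 (one person changes it by at most 1),
    so the noise scale is 1 / epsilon: smaller epsilon means more noise and
    stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical records: (patient_id, age). Query: how many patients are over 65?
patients = [(i, 40 + (i * 7) % 50) for i in range(200)]
noisy = private_count(patients, lambda p: p[1] > 65, epsilon=0.5, rng=random.Random(42))
print(round(noisy, 1))
```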

6. Navigating Legal and Regulatory Uncertainty

AI regulations are still evolving, and rules around liability, intellectual property, and compliance vary widely across countries and industries. If an autonomous vehicle’s AI makes a fatal error, who is legally responsible: the manufacturer, the software developer, or the end user? Clear legal frameworks are still lacking.

Proposed Actions

- Engage proactively with policymakers, legal scholars, and industry groups to help shape emerging AI regulations.

- Maintain thorough documentation of data provenance, model architectures, and decision logic to demonstrate compliance.

- Create interdisciplinary teams (legal experts, compliance officers, data scientists) to continuously monitor regulatory changes and update AI governance policies.
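
Documenting data provenance can start with a simple machine-readable record stored alongside each trained model. The sketch below fingerprints the training data with SHA-256. Anyone can later check which exact dataset produced a given model version. The `provenance_record` helper, its field names, and the example model and dataset names are all hypothetical; adapt them to your own governance schema.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint(data: bytes) -> str:
    """SHA-256 hex digest: any change to the data changes the fingerprint."""
    return hashlib.sha256(data).hexdigest()


def provenance_record(model_name, model_version, training_data: bytes,
                      data_source, hyperparameters):
    """Build a hypothetical, machine-readable provenance entry for one model."""
    return {
        "model_name": model_name,
        "model_version": model_version,
        "training_data_sha256": fingerprint(training_data),
        "data_source": data_source,
        "hyperparameters": hyperparameters,
        "recorded_at_utc": datetime.now(timezone.utc).isoformat(),
    }


# Hypothetical usage: in practice, hash the exact training file or snapshot.
record = provenance_record(
    model_name="credit-risk-scorer",
    model_version="1.4.0",
    training_data=b"applicant_id,income,label\n1,52000,0\n2,31000,1\n",
    data_source="loans_2024_q4 snapshot (internal warehouse)",
    hyperparameters={"max_depth": 4, "learning_rate": 0.1},
)
print(json.dumps(record, indent=2, sort_keys=True))
```

Appending such records to an append-only log gives auditors a way to tie any prediction back to a specific model version and dataset.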

7. Ensuring AI Transparency and Explainability

Black-box AI models (e.g., deep neural networks) can achieve impressive accuracy but offer little insight into how they reach decisions. In high-stakes domains like healthcare or finance, stakeholders demand understandable explanations, such as “Why did the model flag this loan application as high-risk?”

Proposed Actions

- Integrate explainable AI (XAI) techniques, such as SHAP values, LIME, saliency maps, or concept-based explanations, to provide human-interpretable rationales.

- Build model cards and datasheets for datasets that document intended use cases, limitations, and known biases.

- When possible, favor inherently interpretable models (decision trees, generalized additive models) if accuracy trade-offs are acceptable.
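
SHAP builds on Shapley values from cooperative game theory. For a model with only a few features, Shapley values can be computed exactly by enumerating every coalition of features. Features outside a coalition are set to a baseline value. The sketch below does this for a hypothetical three-feature linear risk score. It only illustrates the idea. Practical tools such as the SHAP library use model-specific algorithms or approximations, because exact enumeration grows as 2^n in the number of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for model(x) relative to model(baseline).

    A feature "absent" from a coalition is replaced by its baseline value.
    Cost grows as 2^n model calls, so this is only practical for small n.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # coalition sizes 0 .. n-1, not counting feature i
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when added to coalition s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical linear risk score with three features.
def risk_score(z):
    return 2 * z[0] + 3 * z[1] - 1 * z[2] + 1


print(shapley_values(risk_score, x=[1, 2, 3], baseline=[0, 0, 0]))
```

For a linear model with this baseline, each feature’s Shapley value equals its weight times its deviation from the baseline, giving roughly [2, 6, -3]. The values sum to the difference between the prediction and the baseline prediction (6 - 1 = 5).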

8. Widespread Lack of AI Literacy

Even as AI penetrates everyday life (voice assistants in our homes, recommendation engines online, chatbots in customer service), many users and decision-makers still struggle to understand AI’s capabilities and limitations. Misconceptions can lead to misplaced trust or undue fear.

Proposed Actions

- Launch accessible AI literacy initiatives (online courses, community workshops, and interactive demos) to demystify core concepts.

- Encourage universities and companies to incorporate AI ethics and basic ML principles into general curricula.

- Develop “AI explainers” in products and services: short, intuitive pop-ups or guided tutorials that reveal how and why the AI made a specific recommendation.

9. Building and Maintaining User Trust

Trust is the cornerstone of AI adoption. If users fear data misuse, opaque decision-making, or unexpected biases, they won’t embrace AI-driven services. How can organizations cultivate and preserve that trust?

Proposed Actions

- Emphasize transparency: publicly share model performance metrics, known limitations, and real-world accuracy benchmarks.

- Provide clear opt-in/opt-out controls for data collection and algorithmic personalization.

- Perform regular third-party audits of AI systems to verify fairness, security, and compliance, then share summary reports with stakeholders.

10. Addressing the “Black Box” Explainability Gap

Closely related to transparency, explainability specifically tackles the technical challenge of revealing internal decision paths within complex models like transformers or convolutional neural networks. Without explainability, diagnosing errors or biases becomes nearly impossible.

Proposed Actions

- Advance research into XAI subfields (counterfactual explanations, prototype-driven interpretations, and model distillation) to surface intelligible insights.

- Mandate explainability standards for AI systems used in regulated sectors (e.g., healthcare, finance, criminal justice).

- Maintain a “human-in-the-loop” paradigm: ensure that critical decisions (e.g., medical diagnoses or parole recommendations) involve human oversight and aren’t fully autonomous.
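
One of the simplest counterfactual explanations answers a single question: what is the smallest change to one feature that would flip the model’s decision? For a linear scoring model the answer has a closed form. Moving feature j by (threshold - score) / w_j puts the score exactly on the decision boundary. The sketch below assumes a hypothetical linear loan model that approves when the score is at least the threshold. Counterfactual methods for nonlinear models usually search numerically and add constraints such as plausibility and immutable features.

```python
def single_feature_counterfactuals(weights, bias, x, threshold=0.0):
    """For a linear score s = w.x + b (approve when s >= threshold), return the
    current score and, for each feature j with w_j != 0, the value of x_j that
    would put the score exactly on the threshold with all other features fixed.
    """
    score = sum(w * v for w, v in zip(weights, x)) + bias
    gap = threshold - score
    counterfactuals = {}
    for j, w in enumerate(weights):
        if w == 0:
            continue  # changing this feature cannot move the score
        counterfactuals[j] = x[j] + gap / w
    return score, counterfactuals


# Hypothetical loan model: feature 0 = income (tens of thousands),
# feature 1 = number of missed payments.
score, cf = single_feature_counterfactuals(weights=[0.5, -1.0], bias=-1.0, x=[2.0, 1.0])
print(score)  # -1.0 -> below the threshold of 0, so the application is declined
print(cf)     # {0: 4.0, 1: 0.0}
```

Here the applicant would reach the approval threshold by raising feature 0 from 2 to 4, or by lowering feature 1 from 1 to 0. A human reviewer can relay that kind of actionable statement to the applicant.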

11. Preventing Discriminatory Outcomes

Discrimination in AI can arise when underrepresented groups receive lower-quality service due to skewed training data or flawed objective functions. For example, a recruitment AI might favor candidates from certain universities or demographics if historical hiring data is biased.

Proposed Actions

- Implement fairness checks at multiple stages: pre-training (dataset balancing), in-training (penalize biased predictions), and post-deployment (audit real-world outcomes).

- Employ fairness metrics such as statistical parity, equalized odds, and the disparate impact ratio to quantitatively measure discriminatory behavior.

- Engage domain experts and representatives from vulnerable communities during model design to identify potential blind spots early.
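
The fairness metrics listed above are simple to compute from a model’s predictions. The sketch below assumes binary labels and predictions, with 1 as the favorable outcome, plus one privileged and one unprivileged group. The group names and data are hypothetical. Statistical parity difference and the disparate impact ratio compare selection rates. Equalized odds compares true-positive and false-positive rates and holds only when both gaps are zero.

```python
def _rate(values):
    """Mean of a list of 0/1 values; NaN if the list is empty."""
    return sum(values) / len(values) if values else float("nan")


def fairness_report(y_true, y_pred, groups, privileged, unprivileged):
    """Group-fairness metrics for binary predictions (1 = favorable outcome)."""

    def selection_rate(g):
        return _rate([p for p, grp in zip(y_pred, groups) if grp == g])

    def rate_given_label(g, label):
        # label=1 gives the true-positive rate, label=0 the false-positive rate.
        return _rate([p for p, t, grp in zip(y_pred, y_true, groups)
                      if grp == g and t == label])

    sr_priv, sr_unpriv = selection_rate(privileged), selection_rate(unprivileged)
    return {
        "statistical_parity_difference": sr_unpriv - sr_priv,  # 0 means parity
        "disparate_impact_ratio": sr_unpriv / sr_priv if sr_priv else float("nan"),  # 1 means parity
        # Equalized odds holds when both of these gaps are 0.
        "tpr_gap": rate_given_label(unprivileged, 1) - rate_given_label(privileged, 1),
        "fpr_gap": rate_given_label(unprivileged, 0) - rate_given_label(privileged, 0),
    }


# Hypothetical screening results for two groups of four applicants each.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A"] * 4 + ["B"] * 4
for name, value in fairness_report(y_true, y_pred, groups, "A", "B").items():
    print(f"{name}: {value:.3f}")
```

In this toy data, group B is selected at one third of group A’s rate. US employment guidance often flags a disparate impact ratio below 0.8 under the “four-fifths rule.” Still, no single threshold settles whether a system is fair, and the metrics can conflict with one another.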

12. Managing Unrealistic Expectations

The hype around AI can lead organizations, investors, and the public to expect near-magical results. When reality falls short (model performance doesn’t meet lofty goals, or implementation takes far longer than planned), disillusionment can set in.

Proposed Actions

- Foster realistic goal-setting: clarify what AI can and cannot do in your specific context.

- Run small-scale pilots with well-defined success metrics before scaling.

- Share both successes and failures transparently to build a culture of iterative learning, rather than one of “AI or bust.”

13. Crafting Effective Implementation Strategies

Even when an organization recognizes AI’s potential, devising a coherent implementation plan can be daunting: choosing the right use case, securing quality data, selecting appropriate algorithms, and aligning stakeholders.

Proposed Actions

- Start with a clear business problem: identify pain points that AI can plausibly address (e.g., customer churn prediction, supply chain optimization).

- Form a cross-functional AI task force (data engineers, domain experts, IT security, legal counsel) to define requirements, success criteria, and risk mitigation steps.

- Develop a phased rollout: begin with a minimal viable model (MVM) in a limited environment, gather feedback, iterate, then expand gradually.

14. Protecting Data Confidentiality

AI thrives on data, often requiring sensitive personal or proprietary information. Even seemingly innocuous metadata can reveal patterns that threaten individual privacy. In 2025, as data breaches become more sophisticated, safeguarding data confidentiality is paramount.

Proposed Actions

- Encrypt data at rest and in transit and, where feasible, use homomorphic encryption (so models can compute on encrypted data without decrypting it) or secure enclaves (so data is decrypted only inside isolated, hardware-protected memory).

- Adopt privacy-preserving learning techniques (federated learning, differential privacy, or secure multi-party computation) so that raw data can stay on user devices or in secure silos and individual records are harder to reconstruct from model outputs.

- Maintain strict access controls, audit logs, and regular penetration testing to ensure no unauthorized data access or leakage.
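
Federated averaging (FedAvg) is a simple way to illustrate federated learning. Each client trains on its own data and sends back only model parameters. The server then averages those parameters in proportion to each client’s dataset size. The sketch below uses a deliberately tiny one-parameter linear model and hypothetical client data, and it simulates clients and server in one process. Real deployments typically add secure aggregation, differential privacy, or both, because model updates themselves can leak information.

```python
def local_update(w, data, lr=0.1, epochs=5):
    """Client step (on-device in a real deployment): gradient descent on mean
    squared error for y ~ w * x. Only the updated weight is returned; the
    raw (x, y) pairs never leave this function."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_averaging(global_w, client_datasets, rounds=20):
    """Server step: average client weights, weighted by each client's dataset size."""
    for _ in range(rounds):
        updates = [(local_update(global_w, data), len(data)) for data in client_datasets]
        total = sum(n for _, n in updates)
        global_w = sum(w * n for w, n in updates) / total
    return global_w


# Hypothetical clients whose private data both follow y = 3x.
client_a = [(1.0, 3.0), (2.0, 6.0)]
client_b = [(1.0, 3.0), (3.0, 9.0), (0.5, 1.5)]
print(federated_averaging(0.0, [client_a, client_b]))  # close to 3.0
```

Both hypothetical clients hold data generated by y = 3x. The global weight therefore converges to 3, even though the server never sees a single raw (x, y) pair.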

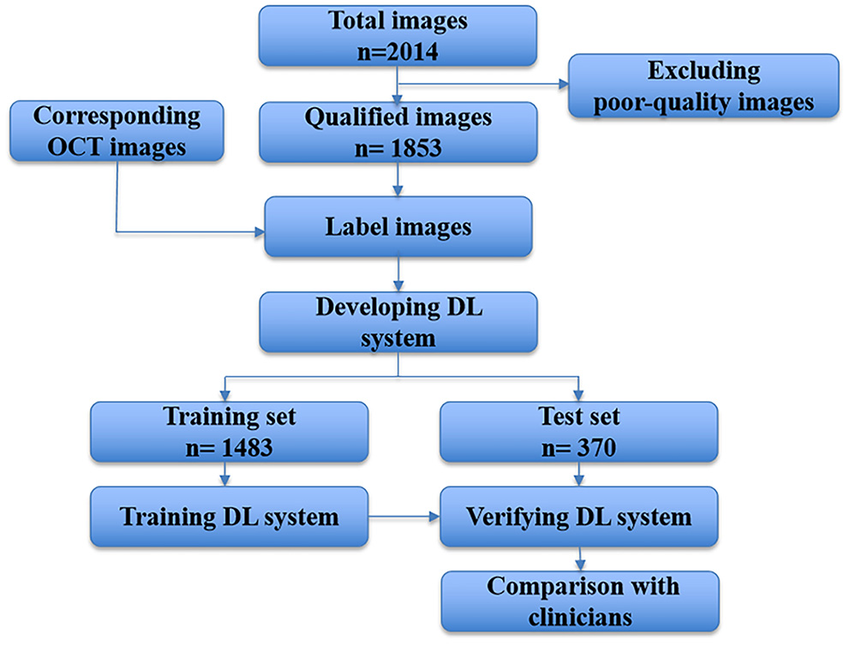

15. Preventing AI Software Malfunctions

When AI systems fail, whether due to unexpected edge cases, data drift, or software bugs, the consequences can be severe: misdiagnoses in healthcare, trading errors in finance, or even safety hazards in self-driving cars.

Proposed Actions

- Establish thorough testing pipelines that include unit tests, integration tests, and adversarial robustness checks (evaluate how the model behaves with intentionally perturbed inputs).

- Adopt continuous monitoring: track model performance metrics (accuracy, precision/recall, calibration) over time and set up alerting thresholds when performance drifts.

- Develop clear incident response plans: define roles, communication channels, and rollback procedures should an AI-driven system exhibit anomalous or dangerous behavior.
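
A widely used statistic for the monitoring step is the Population Stability Index (PSI). It compares the binned distribution of a feature or model score in production against a reference sample from training. The sketch below uses equal-width bins taken from the reference data. Its 0.25 alert threshold is a common industry rule of thumb, not a standard. The function names and the score data are hypothetical.

```python
import math


def population_stability_index(reference, current, bins=10, eps=1e-6):
    """PSI = sum over bins of (cur - ref) * ln(cur / ref), using equal-width
    bins defined on the reference sample. `eps` avoids log(0) for empty bins."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values to edge bins
        return [max(c / len(values), eps) for c in counts]

    ref, cur = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def drift_alert(reference, current, threshold=0.25):
    """Return (psi, alert); 0.25 is a common rule of thumb, not a standard."""
    psi = population_stability_index(reference, current)
    return psi, psi > threshold


# Hypothetical model scores: training-time reference vs. a shifted production window.
reference_scores = [i / 100 for i in range(100)]
production_scores = [0.5 + i / 200 for i in range(100)]
psi, alert = drift_alert(reference_scores, production_scores)
print(f"PSI = {psi:.3f}, alert = {alert}")
```

In practice you would compute PSI per feature and per score on a schedule, and route alerts into the incident response process.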

Overcoming AI Challenges: A Roadmap

Addressing these 15 challenges requires a strategic, multi-pronged approach. Below are recommended steps for organizations aiming to maximize AI’s benefits while mitigating risks:

Establish Ethical Guidelines & Governance

- Create AI ethics committees to review proposed projects, ensuring alignment with human rights, fairness, and societal well-being.

- Maintain clear documentation (model cards, datasheets) that outlines intended use, data sources, and known limitations.

Implement Bias Mitigation Measures

- Audit datasets for representation gaps; use data augmentation or reweighting techniques to correct imbalances.

- Apply fairness-aware algorithms during model training and evaluate outcomes using multiple fairness metrics.

- Involve diverse stakeholder groups in model validation to catch subtle biases early.

Prioritize Explainability & Transparency

- Integrate explainable AI methods (e.g., SHAP, LIME) to provide human-friendly justifications for AI outputs.

- Offer users control over data collection and usage (clear opt-in/opt-out frameworks).

- Publish white papers or public summaries describing algorithmic approaches, especially for high-impact use cases.

Strengthen Data Privacy & Security

- Encrypt sensitive data at every stage (collection, storage, and processing) and dispose of it securely when it is no longer needed.

- Use federated learning or differential privacy when handling personal or proprietary information.

- Conduct regular security audits, penetration tests, and vulnerability assessments on AI infrastructure.

Foster AI Literacy & Manage Expectations

- Develop internal and public-facing educational programs that explain AI’s capabilities, limitations, and ethical considerations.

- Set realistic timelines and KPIs for AI initiatives: start small, measure impact, and iterate.

- Share both successes and failures openly to encourage a learning-oriented culture.

Ensure Legal Compliance & Clear Accountability

- Work closely with legal teams to track evolving regulations (e.g., GDPR, CCPA, and AI-specific laws such as the EU AI Act) and ensure compliance in data handling and AI deployment.

- Maintain detailed logs of model versions, data lineage, and decision pathways to help determine liability if something goes wrong.

- Define accountability structures: who is responsible if an AI system malfunctions or causes harm?

Invest in Scalable Infrastructure & Skill Development

- Leverage cloud providers’ AI/ML services (managed GPUs/TPUs, AutoML platforms) to balance performance and cost.

- Explore emerging hardware options (neuromorphic chips, energy-efficient accelerators) to reduce energy footprints.

- Upskill technical and non-technical staff: data engineers, ML engineers, domain experts, and business leaders must collaborate and learn from one another.

AI’s Evolution in the Business World

Even as we grapple with these 15 challenges, the outlook for AI in business is bright. In 2025 and beyond, expect to see:

- Automated Repetitive Workflows: Mundane tasks (data entry, basic customer support, routine quality checks) will increasingly be handled by AI, freeing human employees for creative and strategic endeavors.

- Data-Driven Decision Making: AI analytics will sift through mountains of structured and unstructured data in real time, uncovering patterns that guide smarter business decisions and faster pivots.

- Hyper-Personalized Customer Experiences: From tailored product recommendations to real-time dynamic pricing and personalized marketing campaigns, AI will help businesses treat every customer as an individual.

- Competitive Differentiation & Loyalty: Early AI adopters who solve complex problems (predictive maintenance, risk modeling, supply chain optimization) will gain lasting advantages in their markets.

- Predictive Market Trends: AI’s predictive analytics will help anticipate emerging consumer behaviors, economic shifts, and supply-chain bottlenecks, enabling proactive rather than reactive strategies.

- Deepening AI–Human Collaboration: As AI takes on data-heavy, repetitive tasks, human professionals will focus on critical thinking, complex problem-solving, and ethical oversight, creating a synergy that drives innovation forward.

How does AI affect jobs and the workforce?

AI automates routine, repetitive tasks, leading to displacement in some roles, but also spawns new opportunities in AI development, data science, and ethics oversight. Reskilling and upskilling programs are crucial to help workers transition into AI-augmented positions.

How can we secure AI systems?

Securing AI requires a multi-layered approach: encrypt data at rest and in transit, adopt secure model-serving platforms, regularly audit code and infrastructure, and employ privacy-preserving methods (differential privacy, federated learning) to minimize data exposure.

What’s the key to tackling AI’s biggest challenges?

Interdisciplinary collaboration is essential, bringing together technologists, domain experts, ethicists, lawyers, and end-users to co-design, audit, and govern AI systems responsibly.

What four main problems can AI solve?

Automation of Repetitive Tasks: Streamlining workflows like data entry and basic customer support.

Process Optimization: Enhancing efficiency through predictive analytics, supply-chain forecasting, and dynamic pricing.

Outcome Prediction: Leveraging historical data to forecast trends, be it equipment failures, disease outbreaks, or financial market shifts.

Personalization: Tailoring user experiences via recommendation engines, chatbots, and adaptive interfaces.

AI’s immense potential comes hand-in-hand with complex challenges. By staying informed, collaborating across disciplines, and building transparent, ethical, and robust AI systems, we can harness its power while safeguarding individual rights and societal well-being. Here’s to shaping a responsible AI-driven future in 2025 and beyond!